Background:

One classic cause of civilization-ending apocalypse in science fiction is AI-initiated nuclear war, as variously depicted in WarGames (1983), The Terminator (1984), Battlestar Galactica (2003), and other media.

The Issue:

Generally, humans don’t fare well in a post-nuclear-apocalypse setting, and would like to avoid it when possible.

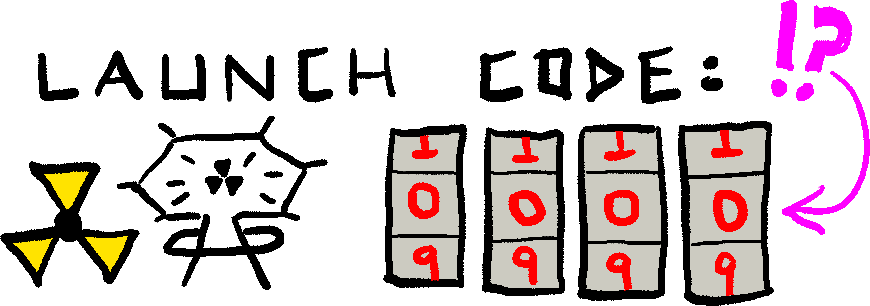

Unfortunately, security around nuclear launches may be inadequate (Figure 1): it was allegedly possible at one point to end the world with just the combination “0000 0000” (https://www.google.com/search?q=icbm+launch+code+00000000).

Additionally, as machine learning becomes more and more capable, it becomes tempting to delegate command of world-ending weaponry to AI systems. One can imagine a missile launch platform’s commander complaining: “Why do I have to keep typing ‘Y’ to confirm ‘MISSILE LAUNCH PROPOSED BY COMPUTER: ACCEPT? Y/N’? It’s such a waste of time to manually confirm each one!”

It is likely that humans will either 1) suggest that the computer be given the authority to launch missiles without human intervention or 2) implement a lazy workaround to avoid having to confirm each launch (for example, a rock placed on the “Y” key).

Proposal:

What we need to do is make it impossible for AI to initiate missile launches, and make the process just complicated enough that a human can’t simply hit the “Y” key to confirm a proposed launch.
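As a rough illustration of that second requirement, here is a minimal Python sketch of a confirmation step where typing “Y” is necessary but not sufficient. Everything in it is hypothetical: `confirm_launch`, `read_input`, and `human_check` are names invented for this example, and `human_check` is a stand-in for whatever “humans only” test ends up being used.

```python
from typing import Callable


def confirm_launch(
    read_input: Callable[[str], str],
    human_check: Callable[[], bool],
) -> bool:
    """Return True only if the operator answers "Y" AND passes the human check.

    Both callables are hypothetical stand-ins:
      read_input  - asks the operator a question and returns the typed answer
      human_check - runs some "humans only" test and reports pass/fail
    """
    answer = read_input("MISSILE LAUNCH PROPOSED BY COMPUTER: ACCEPT? Y/N ")
    if answer.strip().upper() != "Y":
        return False
    # A bare "Y" is no longer enough: a rock resting on the key gets this far
    # but still has to pass the human check.
    return human_check()
```

In real use, `read_input` could simply be Python’s built-in `input`. The rock-on-the-“Y”-key workaround corresponds to `confirm_launch(lambda prompt: "Y", lambda: False)`, which returns `False`.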

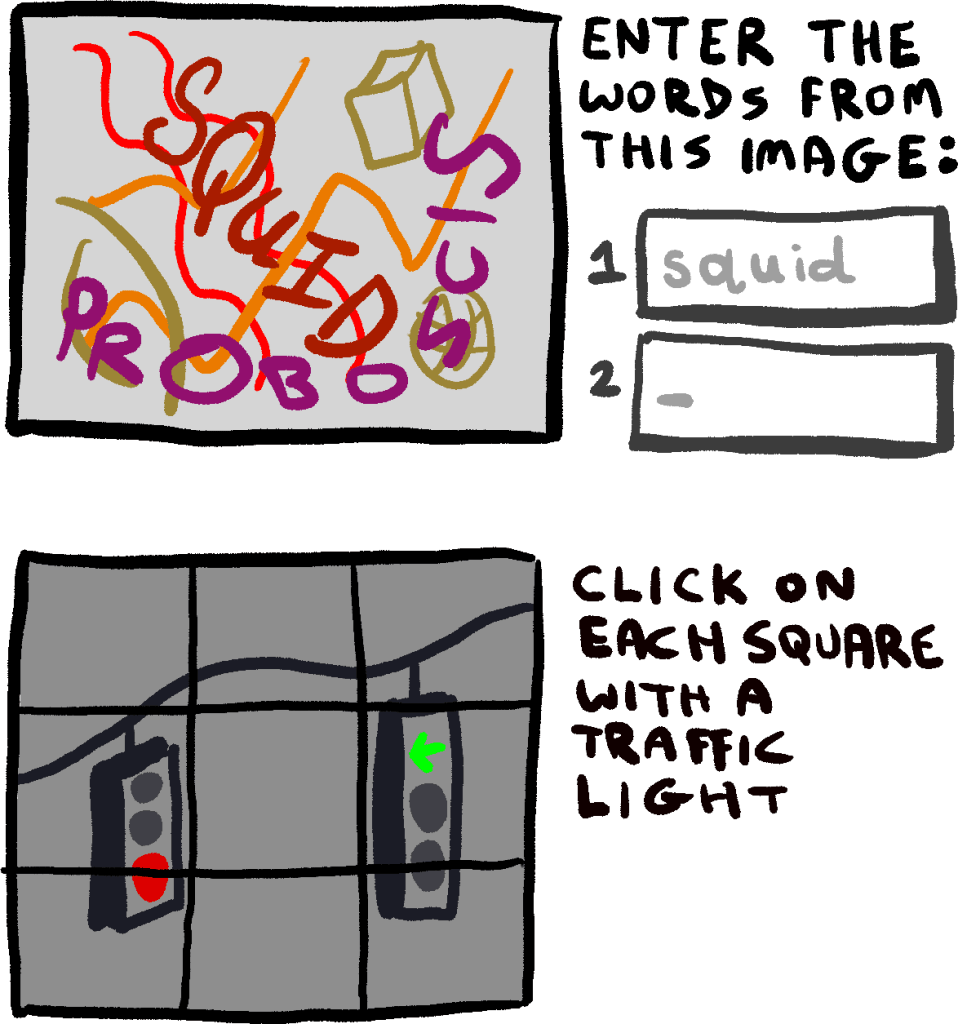

Fortunately, a large amount of work has gone into this field of research. Let’s examine the suitability of the so-called “CAPTCHA” verification test (Figure 2) for nuclear launch security.

CAPTCHAs were originally somewhat effective as a “humans only” authorization system. Unfortunately, AI has developed so quickly that CAPTCHAs are now much less effective.

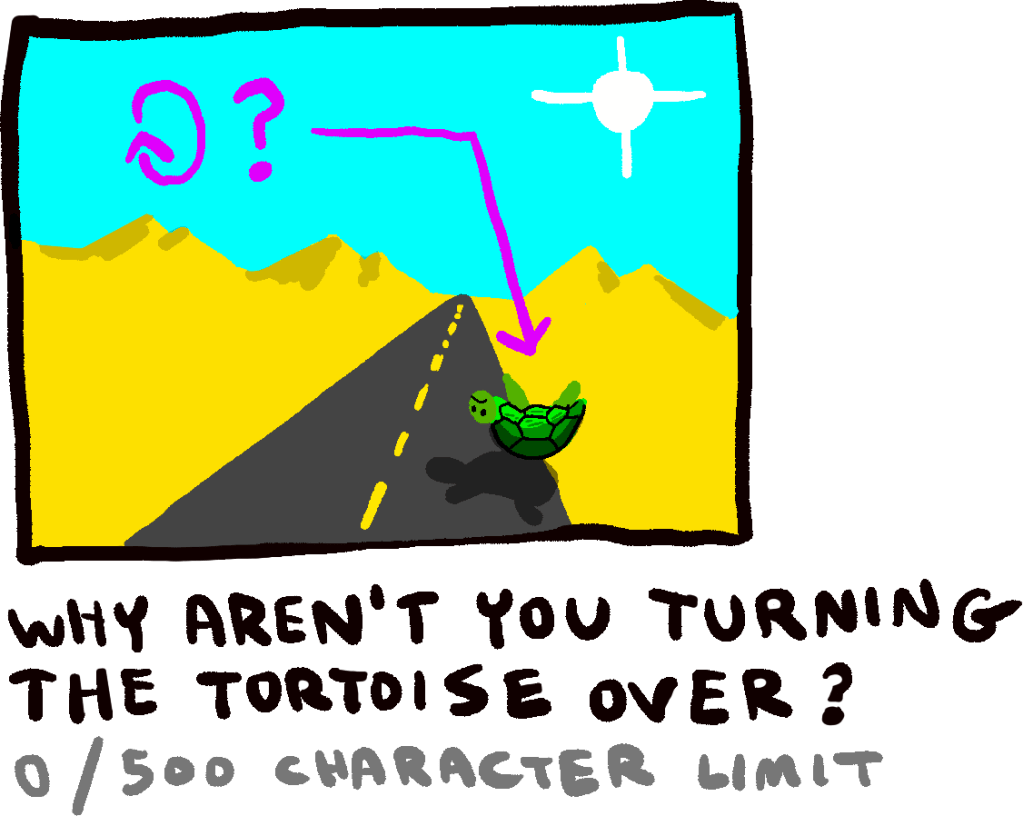

Luckily, there’s one more option: in the documentary Blade Runner (1982), we learn that humans are distinguishable from artificially constructed intelligences (“replicants”) by a series of psychologically intense questions, the most famous of which is shown in Figure 3.

It is unclear how exactly the free-form answers to the test above would be scored in order to distinguish humans from AIs, but perhaps it would become obvious if you had, say, 10 undergraduate psychology majors and 10 different AIs take the test, and then compared the two groups’ responses.
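One way that comparison could work, sketched in Python under a big assumption: that each free-form answer has somehow already been boiled down to a single number, with humans tending to score higher. Nothing here says how to produce that number, and the function name `best_threshold` is made up for this example. Given the two groups’ scores, it just looks for the cutoff that sorts the most test-takers correctly.

```python
def best_threshold(human_scores, ai_scores):
    """Find the cutoff that best separates the two groups.

    Assumption (not from the test itself): every response has already been
    reduced to one number, and anyone scoring at or above the cutoff is
    classified as human. Both lists are assumed to be non-empty.
    Returns (cutoff, fraction of test-takers classified correctly).
    """
    candidates = sorted(set(human_scores) | set(ai_scores))
    best_cut, best_correct = None, -1
    for cut in candidates:
        # Humans at or above the cutoff and AIs below it count as correct.
        correct = sum(s >= cut for s in human_scores) + sum(
            s < cut for s in ai_scores
        )
        if correct > best_correct:
            best_cut, best_correct = cut, correct
    total = len(human_scores) + len(ai_scores)
    return best_cut, best_correct / total
```

If the best achievable fraction is close to 0.5, the chosen number does not distinguish the groups at all, and a different way of scoring the answers would be needed.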

Conclusion:

By securing the nuclear launch process with Blade Runner’s “Voight-Kampff test” (https://www.google.com/search?q=voight+kampf+test), we can ensure that it will be impossible for AI to cause a nuclear apocalypse*! Mission accomplished: nuclear arsenal secured!

[*] Unless it convinces at least one human in the entire world to work on its behalf.

PROS: Completely protects humans against the threat of AI-initiated nuclear apocalypse.

CONS: Might be annoying if you were the person whose job it was to launch the world-ending missiles, but you also had to click on a bunch of traffic lights or motorcycles in a grid or whatever. “Could this day get any worse???” you might say, as you tried to read the weird squiggly phrase “JUDGMENT DAY” off of a crummy, highly compressed JPEG image.

Originally published 2024-12-30.
